Delphi Digital: The industry's most advanced guide to Ethereum

The Hitchhiker's Guide to Ethereum.

Original author: Jon Charbonneau

xinyang@DAOrayaki.org, DoraFactory

Key points:

Ethereum is the only major protocol building toward a scalable, unified settlement and data availability layer

Rollups scale computation while leveraging Ethereum's security

All roads lead to the endgame of centralized block production, decentralized trustless block validation, and censorship resistance.

Innovations such as proposer-builder separation and weak statelessness bring about a separation of powers (building and validating) that allows scaling without sacrificing security or decentralization

MEV is now front and center: many designs are planned to mitigate its harms and prevent its centralizing tendencies

Danksharding combines multiple avenues of cutting-edge research to provide the scalable base layer required for Ethereum's rollup-centric roadmap

I do expect Danksharding to be implemented in our lifetimes

Contents

Part 1 The Road to Danksharding

Original data sharding design - independent sharding proposal

Data Availability Sampling (DAS)

KZG Commitment

KZG commitments vs. fraud proofs

In-protocol proposer-builder separation

Censorship Resistance List (crList)

2D KZG scheme

Danksharding

Danksharding - Honest majority validation

Danksharding - Reconstruction

Danksharding - Malicious majority safety with private random sampling

Danksharding - Key summary

Danksharding - Constraints on blockchain scaling

Proto-Danksharding (EIP-4844)

Multidimensional EIP-1559

Part 2 History and State Management

Calldata gas cost reduction with total calldata limit (EIP-4488)

Bounding historical data in execution clients (EIP-4444)

Retrieving historical data

Weak Statelessness

Verkle Tries

State expiry

Part 3 It's All MEV's Fault

Today's MEV supply chain

MEV-Boost

Committee-driven MEV Smoothing

Single-slot Finality

Single Secret Leader Election

Part 4 The Secret of the Merge

Merged clients

Conclusion

Introduction

I've been pretty skeptical about the timing of the Merge ever since Vitalik said that someone born today has a 50-75% chance of living until the year 3000 and that he hopes to live forever. But let's take a closer look at Ethereum's ambitious roadmap anyway.

(1000 years later)

This article isn't fast food. If you want a broad yet detailed view of Ethereum's ambitious roadmap, give me an hour and I'll save you months of work.

There's a lot to keep track of in Ethereum research, but everything ultimately weaves into one overarching goal: scaling computation without sacrificing decentralized validation.

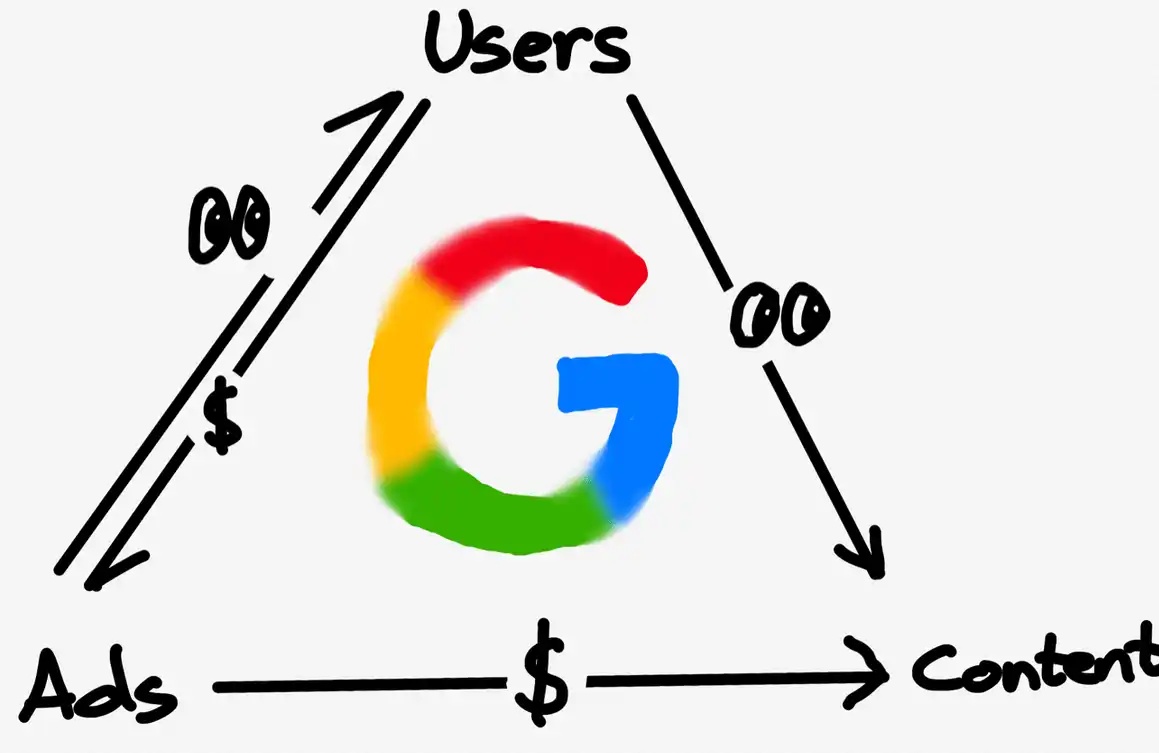

Vitalik has a famous "Endgame" post you may have heard of. He acknowledges that scaling Ethereum requires some centralization. The C word is scary in blockchain, but it's a reality. We just need to keep that power in check with decentralized and trustless validation. There's no compromise here.

Specialized actors will build blocks for L1 and beyond. Ethereum maintains incredible security through simple decentralized validation, while rollups inherit their security from L1. Ethereum then provides settlement and data availability, allowing rollups to scale. All of the research here ultimately aims to optimize these two roles while also making full validation of the blockchain easier than ever.

The following acronyms come up again and again:

DA - Data Availability

DAS - Data Availability Sampling

PBS - Proposer-Builder Separation

PDS - Proto-Danksharding

DS - Danksharding, a sharding design for Ethereum

PoW - Proof of Work

PoS - Proof of Stake

Part 1 The Road to Danksharding

Hopefully you've heard that Ethereum has pivoted to a rollup-centric roadmap. There will be no more execution shards; instead, Ethereum will optimize for data-hungry rollups. This is achieved through data sharding (Ethereum's plan, sort of) or bigger blocks (Celestia's plan).

The consensus layer does not interpret sharded data. It has only one job -- to make sure the data is available.

I'll assume you're familiar with basic concepts such as rollups, fraud and ZK proofs, and why DA (data availability) is important. If you're not, or just need a refresher, check out Can's recent Celestia report.

Original data sharding design - independent sharding proposal

The design described here has been scrapped, but it's worth knowing as background. I'll call it "sharding 1.0" for simplicity.

Each of the 64 shards had its own proposers and committees, rotated in from the validator set. Each committee verified that its shard's data was available. Initially this would not have used DAS (data availability sampling); it relied on an honest majority of each shard's validator set to download the data in full.

This design introduced unnecessary complexity, a worse user experience, and new attack vectors. Shuffling validators between shards is tricky.

Unless you introduce very tight synchrony assumptions, it would also be difficult to guarantee that voting completes within a single slot. The Beacon block proposer needed to gather votes from all of the individual committees, and there could be delays.

(Original data sharding design: each shard is confirmed by a committee vote, voting does not always complete within a single slot, and a shard could take up to two epochs to be confirmed.)

DS (Danksharding) is completely different. Validators perform DAS to confirm that all data is available (no more separate shard committees). A specialized builder creates one big block containing the Beacon block and all shard data together, and that block is confirmed as a whole. PBS (proposer-builder separation) is therefore necessary for DS to remain decentralized, since building that big block is resource intensive.

Data Availability Sampling (DAS)

Rollups will publish a lot of data, but we don't want to burden nodes with downloading all of it. That would imply high resource requirements, to the detriment of decentralization.

Instead, DAS allows nodes (even light clients) to easily and securely verify that all of the data was made available without having to download all of it.

Naive solution: just check a bunch of random chunks of the block, and if they're all fine, sign off. But what if you missed the one transaction that sends all your ETH to some bad guy? That's not safu.

Smart solution: erasure code the data first. The data is extended using Reed-Solomon codes: it is interpolated as a polynomial, which we can then evaluate at additional points. That's a mouthful, so let's break it down.

Don't worry about your math; here's a crash course. (I promise the math isn't that scary here. I had to watch some Khan Academy videos to write these parts, but even I get it now.)

Polynomials are expressions that sum a finite number of terms of the form $c x^k$. The degree is the highest exponent. For example, $2x^3 + 6x^2 + 2x - 4$ is a polynomial of degree three. You can reconstruct any polynomial of degree $d$ from any $d + 1$ coordinates that lie on it.

Now let's look at a concrete example. Here we have four data blocks ($d_0$ to $d_3$). These data blocks can be mapped to the evaluations of a polynomial $f(X)$ at given points, for example $f(0) = d_0$. We then find the lowest-degree polynomial that passes through these evaluations. Since there are four blocks, we can find a polynomial of degree three. We can then extend this data by adding four more evaluations ($e_0$ to $e_3$) that lie on the same polynomial.

Remember that key polynomial property: we can reconstruct it from any four points, not just our original four data blocks.
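To make the extend-then-reconstruct idea concrete, here is a minimal Python sketch of Reed-Solomon extension via Lagrange interpolation. It assumes a toy prime field (modulus 2**31 - 1) rather than the BLS12-381 scalar field the real design uses, and the helpers `lagrange_eval`, `extend` and `reconstruct` are hypothetical names for illustration, not part of any client.

```python
P = 2**31 - 1  # toy prime modulus (assumption); the real scheme uses the BLS12-381 scalar field


def lagrange_eval(points, x):
    """Evaluate at x the unique polynomial of degree < len(points) through `points` (mod P)."""
    total = 0
    for i, (xi, yi) in enumerate(points):
        num, den = 1, 1
        for j, (xj, _) in enumerate(points):
            if j != i:
                num = num * (x - xj) % P
                den = den * (xi - xj) % P
        total = (total + yi * num * pow(den, -1, P)) % P
    return total


def extend(data):
    """Treat data[i] as f(i) and append f(n), ..., f(2n - 1) on the same polynomial."""
    n = len(data)
    points = list(enumerate(data))
    return data + [lagrange_eval(points, x) for x in range(n, 2 * n)]


def reconstruct(samples, n):
    """Recover the original f(0), ..., f(n - 1) from any n distinct (x, f(x)) pairs."""
    if len(samples) < n:
        raise ValueError("need at least n samples to reconstruct")
    return [lagrange_eval(samples[:n], x) for x in range(n)]


data = [5, 11, 2, 7]                                 # d0..d3, arbitrary toy values
extended = extend(data)                              # d0..d3 followed by e0..e3
samples = [(x, extended[x]) for x in (1, 4, 6, 7)]   # any 4 of the 8 points
print(reconstruct(samples, 4) == data)               # True
```

Any four of the eight evaluations work, including the four extension values alone, which is exactly why a sampler only needs to be convinced that half of the extended data is out there.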

Back to our DAS. Now we just need to make sure that any 50% (4/8) of the erasure-coded data is available. From that, we can reconstruct the entire block.

Therefore, an attacker would have to hide more than 50% of the block to trick DAS nodes into thinking the data was made available when it wasn't.

The probability that less than 50% of the data is available after many successful random samples is very small. If we successfully sample the erasure-coded data 30 times, the probability that less than 50% is available is at most $2^{-30}$.
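The $2^{-30}$ figure follows from a simple model, sketched below in Python under stated assumptions: each sample is drawn uniformly at random (with replacement), and an attacker who wants the block to be unrecoverable must withhold more than half of the extended chunks. Every sample then succeeds with probability below 1/2, so 30 successes in a row happen with probability below $(1/2)^{30}$. The helper name `fooling_bound` is hypothetical.

```python
from fractions import Fraction


def fooling_bound(available_fraction: Fraction, samples: int) -> Fraction:
    """Upper bound on the chance that `samples` independent uniform samples all hit
    published chunks when at most `available_fraction` of the chunks were published."""
    return available_fraction ** samples


bound = fooling_bound(Fraction(1, 2), 30)
print(bound == Fraction(1, 2**30))  # True
print(float(bound))                 # about 9.3e-10, roughly one in a billion
```

Exact fractions are used so the comparison with $2^{-30}$ is not muddied by floating-point rounding.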

KZG Commitment

Okay, so we took a bunch of random samples, and they were all available. But we still have a question: was the data erasure coded correctly? If not, maybe the block creator just added 50% garbage when extending the block, and our sampling was for nothing. In that case, we wouldn't actually be able to reconstruct the data.

Normally, we commit to a lot of data using a Merkle root. This works well for proving that some data is included in a set.

However, we also need to know that all of the original and extended data lie on the same low-degree polynomial. A Merkle root can't prove that. So if you use this scheme, you also need fraud proofs in case the extension was done incorrectly.

(Merkle roots allow us to commit to data and extensions of data, but it doesn't tell us whether they fall on the same low-degree polynomial.)

Developers are approaching this problem from two directions:

- Celestia is going down the fraud-proof route. Someone needs to watch, and if a block is incorrectly erasure coded, they submit a fraud proof to alert everyone. This requires the standard honest minority assumption and a synchrony assumption (i.e., in addition to someone sending me the fraud proof, I also need to assume that I'm connected and will receive it within a bounded time).

- Ethereum and Polygon Avail are going down a new route: KZG commitments (aka Kate commitments). This removes the honest minority and synchrony assumptions needed for fraud proofs to be safe (although those assumptions still exist and are used for reconstruction, which we'll get to shortly).

Other options exist, but they're less popular. You could use ZK proofs, for example. Unfortunately, they are computationally impractical (for now). However, they're expected to improve over the next few years, so Ethereum will likely move to STARKs down the road, since KZG commitments are not quantum-resistant.

(I don't think you're ready for post-quantum STARKs yet, but your kids are gonna love it.)

Back to KZG commitments: these are a polynomial commitment scheme.

A commitment scheme is simply a cryptographic way to provably commit to some value. The best analogy is putting a letter into a locked box and handing it to someone else. Once inside, the letter can't be changed, but the box can be opened with the key and the letter verified. You commit to the letter, and the key is the proof.

In our case, we map all of the original and extended data onto an X,Y grid, then find the lowest-degree polynomial that passes through them (a process known as Lagrange interpolation). This polynomial is what the prover commits to.

(KZG commitments let us commit to data and to the extension of that data, and prove that they fall on the same low-degree polynomial.)

A few key points:

- First we have a polynomial $f(X)$

- The prover makes a commitment $C(f)$ to this polynomial

- This relies on elliptic curve cryptography with a trusted setup. For more on how this works, check out a great thread from Bartek.

- For any evaluation $y = f(z)$ of this polynomial, the prover can compute a proof $\pi(f, z)$

- In human terms: the prover gives these pieces to any verifier, who can then confirm that the value at a certain point (where the value represents the data behind it) correctly lies on the committed polynomial

- This justifies our extension of the original data, because all of the values lie on the same polynomial

- Note: the verifier does not need the polynomial $f(X)$

- Important properties: $O(1)$ commitment size, $O(1)$ proof size, and $O(1)$ verification time. Even for the prover, commitment and proof generation only scale as $O(d)$, where $d$ is the degree of the polynomial

- In human terms: even as $n$ (the number of values in $X$) increases (i.e., as the dataset grows with larger shard blobs), the commitment and proofs stay the same size, and verification takes a constant amount of work

- The commitment $C(f)$ and the proof $\pi(f, z)$ are each just one elliptic curve element on a pairing-friendly curve (BLS12-381 in this case). That makes them only 48 bytes each (really small)

- So the prover's commitment to a huge amount of original and extended data (represented as many evaluations of the polynomial) is still only 48 bytes, and the proof is also only 48 bytes

- In simpler terms: it scales very well
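To give a feel for what the proof $\pi(f, z)$ attests to, here is a deliberately insecure Python toy. It checks the polynomial identity $f(X) - f(z) = (X - z)\,q(X)$ at a single point $\tau$, which is the algebra a KZG opening proof rests on. Assumptions, stated loudly: here the verifier knows $\tau$ and works with plain field elements, whereas real KZG hides $\tau$ inside trusted-setup curve points, makes $C(f)$ and $\pi$ 48-byte BLS12-381 group elements, and verifies with a pairing. The modulus, `TAU`, and the names `commit`, `prove` and `verify` are illustrative, not any library's API.

```python
P = 2**31 - 1      # toy prime (assumption); real KZG uses the BLS12-381 scalar field
TAU = 123456789    # toy "secret" from the setup; real KZG never reveals it to anyone


def poly_eval(coeffs, x):
    """Horner's rule; coeffs[i] is the coefficient of X**i."""
    acc = 0
    for c in reversed(coeffs):
        acc = (acc * x + c) % P
    return acc


def quotient(coeffs, z):
    """Synthetic division: q(X) = (f(X) - f(z)) / (X - z), coefficients low to high."""
    q = [0] * (len(coeffs) - 1)
    carry = 0
    for i in range(len(coeffs) - 1, 0, -1):
        carry = (carry * z + coeffs[i]) % P
        q[i - 1] = carry
    return q


def commit(coeffs):
    # Stand-in for the curve point [f(tau)]; here it is just the number f(tau).
    return poly_eval(coeffs, TAU)


def prove(coeffs, z):
    # Returns the claimed evaluation y = f(z) and a stand-in for the proof [q(tau)].
    return poly_eval(coeffs, z), poly_eval(quotient(coeffs, z), TAU)


def verify(commitment, z, y, proof):
    # Real KZG checks e(C - [y], G2) == e(pi, [tau - z]) with a pairing and never
    # sees tau; this toy checks the same identity f(tau) - y == q(tau) * (tau - z).
    return (commitment - y) % P == proof * (TAU - z) % P


f = [4, 3, 0, 2]                 # f(X) = 2X^3 + 3X + 4, a toy data polynomial
C = commit(f)
y, pi = prove(f, 5)
print(y)                         # 269
print(verify(C, 5, y, pi))       # True
print(verify(C, 5, y + 1, pi))   # False: a wrong value fails the check
```

The point of the real construction is that the verifier can run this same check without ever learning $\tau$ or the polynomial itself, and with constant-size inputs no matter how large $f$ is.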

Here, the KZG root (a polynomial commitment) is analogous to a Merkle root (a vector commitment).

The original data is the polynomial $f(X)$ evaluated at positions $f(0)$ to $f(3)$; we then extend it by evaluating the polynomial at $f(4)$ to $f(7)$. All points $f(0)$ to $f(7)$ are guaranteed to lie on the same polynomial.

In a word: DAS lets us check that erasure-coded data was made available. KZG commitments prove to us that the original data was properly extended, and commit to all of it.

Well, that's the end of the algebra.

KZG commitments vs. fraud proofs

Now that you know how KZG works, let's step back and compare the two approaches.

The downsides of KZG are that it isn't post-quantum secure and it requires a trusted setup. Neither is worrying: STARKs offer a post-quantum alternative, and the trusted setup (which is open to participation) only requires a single honest participant.

The upside of KZG is lower latency than a fraud-proof setup (although Gasper does not have fast finality in any case), and it ensures correct erasure coding without introducing the synchrony and honest minority assumptions inherent in fraud proofs.

However, Ethereum still reintroduces these assumptions for block reconstruction, so you don't actually remove them. The DA layer always needs to plan for the case where the data was initially made available, but nodes then need to communicate with each other to piece it back together. This reconstruction requires two assumptions:

You have enough nodes (light or full) sampling the data that collectively they hold enough to piece it back together. This is a fairly weak and unavoidable honest minority assumption, so not much to worry about.

The synchrony assumption is reintroduced: nodes need to be able to communicate for some period of time in order to piece the data back together.

Ethereum validators fully download shard blobs in PDS (proto-danksharding), whereas with DS they only perform DAS (downloading their assigned rows and columns). Celestia will require validators to download the entire block.

Note that in both cases we need synchrony assumptions for reconstruction. If a block is only partially available, full nodes must communicate with other nodes to piece it together.

The latency advantage of KZG would come into play if Celestia ever shifted from requiring validators to download all of the data to only doing DAS (though no such shift is currently planned).

They would then need to implement KZG commitments as well: waiting on fraud proofs would mean significantly longer block intervals, and the risk of validators voting for an incorrectly encoded block would be too high.

I recommend the following articles to learn more about how KZG commitments work:

The (relatively easy to understand) basics of elliptic curve cryptography

Explore elliptic curve pairing by Vitalik

KZG polynomial commitments by Dankrad

How trusted setups work by Vitalik

In-protocol proposer-builder separation

Consensus nodes today (miners) and after the Merge (validators) serve two roles. They build the actual blocks, then propose them to other consensus nodes, which validate them. Miners "vote" by building on top of the previous block, while post-Merge validators vote directly on blocks as valid or invalid.

PBS (proposer-builder separation) splits these roles: it explicitly creates a new in-protocol builder role. Specialized builders will assemble blocks and bid for proposers (validators) to select their block. This counters MEV's centralizing force.

Recall Vitalik's "Endgame": all roads lead to centralized block production with trustless, decentralized validation. PBS codifies this. We need one honest builder serving the network for liveness and censorship resistance (and two for an efficient market), but the validator set needs an honest majority. PBS keeps the proposer role as simple as possible to support validator decentralization.

Builders receive priority fee tips plus whatever MEV they can extract. In an efficient market, competitive builders will bid up to the full value they can extract from a block (minus amortized costs such as expensive hardware). All of that value trickles down to the decentralized validator set, which is exactly what we want.

The exact PBS implementation is still under discussion, but a two-slot PBS might look like this:

- The builder commits to a block header along with its bid

- The Beacon block proposer selects the winning header and bid. The proposer is unconditionally paid the winning bid, even if the builder fails to produce the block body.

- A committee of attesters confirms the winning header

- The builder reveals the winning body

- A separate committee of attesters elects the winning body (or votes that it is absent if the winning builder withholds it).

The proposer is selected from the validator set using the standard RANDAO mechanism. We then use a commit-reveal scheme in which the full body is not revealed until the header has been confirmed by the committee.

Commit-reveal is more efficient (sending around hundreds of full block bodies could overwhelm the bandwidth of the p2p layer), and it also prevents MEV stealing. If builders submitted their full blocks, another builder could see one, figure out its strategy, incorporate it, and quickly publish a better block. In addition, a sophisticated proposer could detect the MEV strategy being used and copy it without compensating the builder. If this MEV stealing became an equilibrating force, it would incentivize merging the builder and proposer roles, so we avoid it with a commit-reveal scheme.

After the proposer selects the winning header, the committee confirms it and solidifies it in the fork-choice rule. The winning builder then publishes its full "builder block" body. If it is published in time, the next committee attests to it. If the builder fails to publish in time, it still pays the full bid to the proposer (and loses out on all of the MEV and fees). This unconditional payment removes the need for the proposer to trust the builder.
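The commit-reveal flow and the unconditional payment can be sketched in a few lines of Python. This is a simplification under explicit assumptions: a SHA-256 hash of the body stands in for the block header, and signatures, attester committees, slot timing and the fork-choice rule are all left out. `Bid`, `choose_winner` and `settle` are hypothetical names, not a spec API.

```python
import hashlib
from dataclasses import dataclass
from typing import Optional


@dataclass
class Bid:
    builder: str
    value_gwei: int
    body_commitment: str  # stand-in for the header: a hash committing to the hidden body


def commit_body(body: bytes) -> str:
    return hashlib.sha256(body).hexdigest()


def choose_winner(bids):
    """Slot 1: the proposer sees only commitments and bids, and takes the highest bid."""
    return max(bids, key=lambda b: b.value_gwei)


def settle(winner: Bid, revealed_body: Optional[bytes]):
    """Slot 2: returns (payment to proposer, whether the revealed body is accepted)."""
    payment = winner.value_gwei  # unconditional: paid even if the body never arrives
    accepted = (
        revealed_body is not None
        and commit_body(revealed_body) == winner.body_commitment
    )
    return payment, accepted


body_a, body_b = b"txs chosen by builder A", b"txs chosen by builder B"
bids = [Bid("A", 120, commit_body(body_a)), Bid("B", 150, commit_body(body_b))]
winner = choose_winner(bids)
print(winner.builder)            # B
print(settle(winner, body_b))    # (150, True)
print(settle(winner, None))      # (150, False): body withheld, proposer still paid
```

Because the proposer only ever sees hashes before committing, it cannot copy a builder's strategy, and because payment does not depend on the reveal, it has no reason to trust the builder.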

The downside of this "two-slot" design is latency. Post-Merge slots are fixed at 12 seconds, so we'd need 24 seconds for a full block time (two 12-second slots) if we don't want to introduce any new assumptions. 8-second slots (16-second block time) seem like a safe compromise, though research is ongoing.

Censorship Resistance List (crList)

Unfortunately, PBS gives builders a heightened ability to censor transactions. Maybe a builder just doesn't like you, so they ignore your transaction. Maybe they're so good at their job that all the other builders have given up, or maybe they'll bid very high prices for blocks simply because they really don't like you.

crLists put a check on this. The implementation is again an open design space, but "hybrid PBS" seems to be the favorite. The proposer specifies a list of all eligible transactions they see in the mempool, and the builder is forced to include them (unless the block is full):

- The proposer publishes a crList and a crList summary that covers all eligible transactions.

- The builder creates a proposed block body, then submits a bid that includes a hash of the crList summary to prove they have seen it.

- The proposer accepts the winning builder's bid and header (without having seen the body yet).

- The builder publishes its block, including a proof that it has included all transactions from the crList or that the block is full. Otherwise, the block will not be accepted by the fork-choice rule.

- Attesters check the validity of the published body.
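The crList rule in the final steps can be sketched as a validity check. Assumptions to flag: "the block is full" is modeled here as "each missing crList transaction would not have fit in the remaining gas", which is one possible reading rather than the actual proposal; the summary hash format, the 30,000,000 gas limit and all function names are illustrative.

```python
import hashlib


def crlist_summary_hash(crlist):
    """Hypothetical summary hash the builder echoes in its bid to show it saw the crList."""
    return hashlib.sha256("\n".join(crlist).encode()).hexdigest()


def block_satisfies_crlist(block_txs, gas_used, gas_limit, crlist, tx_gas):
    """Accept the block if it includes every crList transaction, or if each missing
    one could not have fit in the gas left (our stand-in for "the block is full")."""
    included = set(block_txs)
    remaining = gas_limit - gas_used
    return all(tx in included or tx_gas[tx] > remaining for tx in crlist)


crlist = ["tx1", "tx2"]
tx_gas = {"tx1": 21_000, "tx2": 50_000}
bid_hash = crlist_summary_hash(crlist)  # carried in the builder's bid

print(block_satisfies_crlist(["tx1", "tx2", "tx9"], 200_000, 30_000_000, crlist, tx_gas))  # True
print(block_satisfies_crlist(["tx1", "tx9"], 200_000, 30_000_000, crlist, tx_gas))         # False: tx2 censored
print(block_satisfies_crlist(["tx1", "tx9"], 29_990_000, 30_000_000, crlist, tx_gas))      # True: block is full
```

The empty-list loophole discussed next is visible here too: with `crlist = []`, every block passes the check.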

There are still important issues to iron out here. For example, one dominant economic strategy is for the proposer to submit an empty list. That way, even builders who want to censor could win the auction by bidding the highest. There are ideas for getting around this (and other problems), but I'm just emphasizing that the design here isn't set in stone.

2D KZG scheme

We saw how KZG commitments let us commit to data and prove it was extended properly. However, that was a simplification of what Ethereum will actually do. It won't commit to all of the data in a single KZG commitment; a single block will use many KZG commitments.

We already have specialized builders, so why not just have them create one giant KZG commitment? The problem is that this would require a powerful supernode to reconstruct. We can accept a supernode requirement for initial block building, but we need to avoid that assumption for reconstruction. Ordinary entities need to be able to handle reconstruction, so it's better to split the data across many KZG commitments. Given the amount of data at hand, reconstruction may even be fairly common, or the base-case assumption in this design.

To make reconstruction easier, each block will contain m shard blobs encoded in m KZG commitments. Done naively, though, this leads to a lot of sampling: you'd run DAS on every shard blob to make sure it's all available (m*k samples, where k is the number of samples per blob).

Instead, Ethereum will use a 2D KZG scheme. We use the Reed-Solomon code again to extend m commitments to 2m commitments.

We make it a 2D scheme by extending extra KZG commitments (256-511 in this case) that lie on the same polynomial as commitments 0-255. Now we just perform DAS on the table above to ensure the availability of all shard data.
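A toy Python version of the 2D extension: extend every row (blob) with Reed-Solomon, then extend every column. Assumptions: a 2 x 2 data grid over a toy prime field instead of 256 blobs of 4096 BLS12-381 field elements, and the extension is done on raw values, whereas in Danksharding the column direction is extended on the KZG commitments themselves. Function names are hypothetical.

```python
P = 2**31 - 1  # toy prime modulus (assumption)


def lagrange_eval(points, x):
    """Evaluate at x the unique polynomial of degree < len(points) through `points` (mod P)."""
    total = 0
    for i, (xi, yi) in enumerate(points):
        num, den = 1, 1
        for j, (xj, _) in enumerate(points):
            if j != i:
                num = num * (x - xj) % P
                den = den * (xi - xj) % P
        total = (total + yi * num * pow(den, -1, P)) % P
    return total


def extend_line(values):
    """Reed-Solomon extend n values to 2n evaluations of the same polynomial."""
    n = len(values)
    pts = list(enumerate(values))
    return list(values) + [lagrange_eval(pts, x) for x in range(n, 2 * n)]


def extend_2d(grid):
    """k x k data -> 2k x 2k: extend every row, then extend every column."""
    rows = [extend_line(row) for row in grid]          # k rows of length 2k
    cols = [extend_line(col) for col in zip(*rows)]    # 2k columns of length 2k
    return [list(row) for row in zip(*cols)]           # back to row-major order


data = [[3, 1], [4, 1]]   # toy 2 x 2 data grid (think: 2 blobs of 2 elements each)
ext = extend_2d(data)
# Every row of the 4 x 4 result, including the two new rows, is itself a valid
# Reed-Solomon codeword: it equals the extension of its own first half.
print(all(row == extend_line(row[:2]) for row in ext))  # True
```

The new rows being valid codewords is what the text means by the extra commitments lying "on the same polynomial": column extension is a fixed linear combination of rows, and a linear combination of codewords is still a codeword.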

2D sampling requires 75% of the data to be available (vs. 50% earlier), which means we need to draw a larger fixed number of samples. Whereas the simple 1D scheme needed 30 samples, this one requires 75 samples to keep the same probabilistic odds of reconstructing an available block.
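Under the same simplified model as the 1D case (uniform samples with replacement, and an attacker who must push availability below the reconstruction threshold), the Python sketch below finds the smallest sample count that matches the 1D scheme's $2^{-30}$ bound. With a 75% threshold it comes out to 73, so the 75 samples quoted above sit just past that minimum. The model and the helper name `samples_needed` are assumptions for illustration.

```python
from fractions import Fraction


def samples_needed(max_available: Fraction, security_bits: int = 30) -> int:
    """Smallest n such that max_available ** n <= 2 ** -security_bits."""
    target = Fraction(1, 2**security_bits)
    n = 0
    while max_available ** n > target:
        n += 1
    return n


print(samples_needed(Fraction(1, 2)))            # 30: 1D, attacker must withhold more than 50%
print(samples_needed(Fraction(3, 4)))            # 73: 2D, withholding more than 25% suffices
print(Fraction(3, 4) ** 75 < Fraction(1, 2**30))  # True
```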

Sharding 1.0 (with a 1D KZG commitment scheme) needed only 30 samples per shard, but you'd have to sample all 64 shards, 1920 samples in total, to check full DA. Each sample is 512 B, so that requires:

(512 B x 64 shards x 30 samples) / 16 seconds = 60 KB/s bandwidth

In reality, validators are shuffled across shards rather than each one checking every shard.

Unified blocks with the 2D KZG scheme make checking full DA extremely easy. Only 75 samples are needed from the single unified block:

(512 B x 1 block x 75 samples) / 16 seconds = 2.5 KB/s bandwidth
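The two bandwidth figures can be reproduced in Python using only the constants stated in the text (512-byte samples, a 16-second block time). The exact results are 61,440 B/s (60 KiB/s) for sharding 1.0 and 2,400 B/s for the unified block, which the text rounds to 2.5 KB/s. The helper name is hypothetical.

```python
SAMPLE_BYTES = 512   # bytes per sample, as stated in the text
BLOCK_SECONDS = 16   # 16-second block time assumed above


def das_bytes_per_second(units: int, samples_per_unit: int) -> int:
    """Bandwidth needed to sample `units` shards/blocks once per block time."""
    return SAMPLE_BYTES * units * samples_per_unit // BLOCK_SECONDS


sharding_1 = das_bytes_per_second(64, 30)    # 64 shards x 30 samples
danksharding = das_bytes_per_second(1, 75)   # 1 unified block x 75 samples
print(sharding_1, sharding_1 / 1024)         # 61440 60.0  (bytes/s, KiB/s)
print(danksharding)                          # 2400
```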

Danksharding

PBS was initially designed to blunt the centralizing forces of MEV on the validator set. However, Dankrad recently realized that this design unlocks a far better sharding construct: DS.

DS leverages the specialized builder to create a tighter integration of the Beacon Chain execution block and shards. We now have one builder creating the entire block together, one proposer, and one committee voting on it at a time. DS would be infeasible without PBS: regular validators couldn't handle the massive bandwidth of a block full of rollups' data blobs.

Sharding 1.0 included 64 separate committees and proposers, which allowed each shard to have issues independently. Tighter integration lets us ensure data availability (DA) all in one shot. Data is still "sharded" inside the black box, but from a practical perspective, sharding starts to feel more like big chunks of data, which is great.

Danksharding - Honest majority validation

Let's look at how validators attest that the data is available:

This relies on an honest majority of validators. As a single validator, the availability of my assigned rows and columns is not enough to give me statistical confidence that the entire block is available. We rely on the honest majority to say that it is. Decentralized validation matters.

Note that this is different from the 75 random samples we discussed earlier. Private random sampling is how low-resourced individuals will be able to easily check availability (for example, I can run a DAS light node and know that the block is available). However, validators will continue to use the rows-and-columns approach to check availability and to bootstrap block reconstruction.

Danksharding - Reconstruction

As long as 50% of an individual row or column is available, the sampling validator can easily reconstruct it in full. As they reconstruct any missing chunks in a row or column, they redistribute those chunks to the orthogonal lines. This helps other validators reconstruct any missing chunks in the rows and columns they intersect, as needed.
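Here is a centralized Python simulation of the row/column recovery just described. Assumptions: a toy 4 x 4 grid (k = 2) over a toy prime field, built directly from a bivariate polynomial with degree below k in each variable (equivalent to extending rows and then columns); a single loop plays the role of all validators, whereas in the real network each validator only repairs its assigned rows and columns and gossips the results. Function names are hypothetical.

```python
P = 2**31 - 1  # toy prime modulus (assumption)


def lagrange_eval(points, x):
    """Evaluate at x the unique polynomial of degree < len(points) through `points` (mod P)."""
    total = 0
    for i, (xi, yi) in enumerate(points):
        num, den = 1, 1
        for j, (xj, _) in enumerate(points):
            if j != i:
                num = num * (x - xj) % P
                den = den * (xi - xj) % P
        total = (total + yi * num * pow(den, -1, P)) % P
    return total


def toy_extended_grid(coeffs, k):
    """2k x 2k grid of F(r, c) = sum of coeffs[i][j] * r**i * c**j, degree < k in each
    variable. This is what extending a k x k data grid along rows, then columns, yields."""
    n = 2 * k
    return [[sum(coeffs[i][j] * pow(r, i, P) * pow(c, j, P)
                 for i in range(k) for j in range(k)) % P
             for c in range(n)]
            for r in range(n)]


def reconstruct(grid, k):
    """Fill in None cells: any row or column with at least k known cells is recovered
    by interpolation, repeated until nothing changes. True if the grid is complete."""
    n = 2 * k
    progress = True
    while progress:
        progress = False
        for line in range(n):
            for is_row in (True, False):
                cells = [(line, i) if is_row else (i, line) for i in range(n)]
                known = [(i, grid[r][c]) for i, (r, c) in enumerate(cells)
                         if grid[r][c] is not None]
                if k <= len(known) < n:
                    for i, (r, c) in enumerate(cells):
                        if grid[r][c] is None:
                            grid[r][c] = lagrange_eval(known[:k], i)
                    progress = True
    return all(v is not None for row in grid for v in row)


k = 2
full = toy_extended_grid([[3, 1], [4, 1]], k)  # toy 4 x 4 extended grid

# Scattered losses: 5 of 16 cells missing.
grid = [row[:] for row in full]
for r, c in [(0, 0), (0, 1), (0, 2), (1, 0), (2, 1)]:
    grid[r][c] = None
print(reconstruct(grid, k), grid == full)  # True True

# A withheld 3 x 3 block (9 of 16 cells): every affected row and column is stuck.
stuck = [row[:] for row in full]
for r in range(3):
    for c in range(3):
        stuck[r][c] = None
print(reconstruct(stuck, k))  # False
```

In the first case row 0 starts with only one known cell, yet it still gets repaired once the columns crossing it are recovered, which is the "redistribute to orthogonal lines" effect from the paragraph above.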

The safety assumptions for reconstructing an available block here are:

Enough nodes performing sample requests that collectively they have enough data to reconstruct the block

A synchrony assumption between the nodes broadcasting their respective pieces of the block

So, how many nodes is enough? A rough estimate is 64,000 individual instances (there are more than 380,000 so far). This is also a very conservative calculation that assumes no crossover between nodes run by the same operator (far from the case, since each validator instance is capped at 32 ETH). If you sample more than 2 rows and 2 columns, crossover increases the odds of collective retrieval. This starts to scale quadratically: if operators are running, say, 10 or 100 validators each, the 64,000 requirement may drop by several orders of magnitude.

If the number of online validators starts to get very low, DS can be set to automatically reduce the number of shard data blobs. The safety assumption is thereby lowered to a safe level.

Danksharding - Malicious majority safety with private random sampling

We saw that DS validation relies on an honest majority to attest to blocks. As an individual, I can't prove a block is available by downloading a couple of rows and columns. However, private random sampling can give this guarantee without trusting anyone. This is where a node checks the 75 random samples discussed earlier.

DS will not initially include private random sampling, since this is a very difficult problem to solve on the networking side (PSA: maybe you can help them!).

The "private" part matters because if an attacker de-anonymizes you, they can fool a small number of sampling nodes. They can return only the exact chunks you asked for and withhold the rest. So you wouldn't know from your own sampling alone that all the data was made available.

Danksharding - Key summary

DS is very exciting, and not just because of the name. It finally realizes Ethereum's vision of a unified settlement and DA layer. This tight coupling of Beacon block and shards essentially pretends not to be sharded at all.

In fact, let's pin down why it's even considered "sharded." The only trace of sharding here is the fact that validators are not responsible for downloading all of the data. Nothing else.

So if you're now wondering whether this really is sharding, you're not crazy. That's why PDS (which we'll get to shortly) is not considered "sharding" (despite the word "sharding" in its name; yes, I know it's confusing). PDS requires each validator to fully download all of the blobs to attest to their availability. DS then introduces sampling, so individual validators only download pieces of it.

Minimal sharding means a far simpler design than sharding 1.0 (so faster delivery, right?). Simplifications include:

- The DS spec is likely hundreds of lines shorter than the sharding 1.0 spec (and client code thousands of lines shorter).

- No more shard committee infrastructure; committees only need to vote on the main chain.

- No tracking of individual shard blob confirmations; they are now all confirmed in the main chain, or not at all.

One nice consequence of this is a consolidated fee market for data. Sharding 1.0, with different blocks made by different proposers, would have fragmented it.

Removing shard committees is also a strong deterrent to bribery. DS validators vote on the whole block once per epoch, so data is immediately confirmed by 1/32 of the entire validator set (32 slots per epoch). Sharding 1.0 validators also voted once per epoch, but each shard had its own committee that needed to be shuffled. So each shard was only confirmed by 1/2048 of the validator set (1/32 split across 64 shards).

As discussed, unified blocks combined with the 2D KZG commitment scheme also make DAS far more efficient. Sharding 1.0 required 60 KB/s of bandwidth to check full DA of all shards; DS requires only 2.5 KB/s.

There's one more exciting possibility for DS: synchronous calls between ZK-rollups and L1 Ethereum execution. Transactions from shard blobs can be immediately confirmed and written to L1, because everything is produced in the same beacon chain block. Sharding 1.0 rules this out because of its separate shard confirmations. This opens up an exciting design space that could be very valuable for things like shared liquidity (e.g., dAMM).

Danksharding -- Constraints on blockchain expansion

Modular layers can scale gracefully -- more decentralization leads to more scale. This is fundamentally different from what we see today. Adding more nodes to the DA layer can safely increase data throughput (that is, more space to allow rollup).

There are still limits to how scalable blockchain can be, but we can improve it by orders of magnitude compared to today. The base layer of security and extensibility allows implementations to be rapidly extended. Improvements in data storage and bandwidth will also improve data throughput over time.

It is certainly possible to exceed the DA throughput envisaged in this article, but it is hard to say where this maximum will fall. We don't have a clear red line, but we can cite ranges where it becomes difficult to support certain assumptions.

Data storage - This has to do with DA and data accessibility. The role of the consensus layer is not to ensure that data can be retrieved indefinitely, it is to make data available long enough for anyone willing to download it to satisfy our security assumptions. Then, it gets transferred anywhere -- which is comfortable, because history is 1 out of N trust assumptions, and we're not actually talking about that much data, that big of a plan. However, as throughput increases, it can fall into uncomfortable zones.

Verifier - the DAS needs enough nodes to rebuild the block together. Otherwise, attackers can wait around and only respond to the queries they receive. If these queries are not enough to rebuild the block, the attacker can withhold the rest and we're done. To safely increase throughput, we need to add more DAS nodes or increase their data bandwidth requirements. This is not a problem for the throughput discussed here. However, if throughput is increased by several orders of magnitude over this design, it can be uncomfortable.

Note that builders are not the bottleneck. They need to generate KZG proofs for 32 MB of data quickly, so they'll want a GPU or a reasonably powerful CPU along with at least 2.5 Gbit/s of bandwidth. Either way, this is a specialized role, and it's a negligible business cost for them.

Proto-Danksharding (EIP-4844)

DS is great, but we'll have to be patient. PDS (Proto-Danksharding) is here to tide us over: it implements the necessary forward-compatible steps on a tight timeline (targeting the Shanghai hard fork) to provide an order of magnitude of scaling in the interim. However, it does not actually implement data sharding yet (that is, every validator still needs to download all of the data individually).

Today's rollups use L1 calldata for storage, which persists on chain forever. However, rollups only need DA for a short period of time, just long enough for anyone interested to download the data.

EIP-4844 introduces a new blob-carrying transaction format, which rollups will use for data storage going forward. Blobs carry a large amount of data (4096 × 32 bytes, roughly 128 KiB), and they are much cheaper than a similar amount of calldata. Data blobs are pruned from nodes after about a month, which reduces storage requirements. That's plenty of time to satisfy our DA security assumptions.

For context, current Ethereum blocks average about 90 KB (of which calldata is about 10 KB). PDS frees up more DA bandwidth for blobs (target ~1 MB, max ~2 MB) because they are pruned after a month, so they are not a permanent burden on nodes.

A blob is a vector of 4096 field elements, each 32 bytes long. PDS allows a maximum of 16 blobs per block, while DS increases this to 256.

PDS DA bandwidth = 4096 × 32 × 16 = 2 MiB per block (target: 1 MiB)

DS DA bandwidth = 4096 × 32 × 256 = 32 MiB per block (target: 16 MiB)

Each step is an order-of-magnitude increase. PDS still requires consensus nodes to download all of the data, so it is conservative. DS distributes the load of storing and propagating data across validators.
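The bandwidth arithmetic above can be checked in a few lines. This is only a sketch: the constant names are my own, and the targets are taken as half the maximum, as stated in the text.

```python
# Blob parameters as described above (PDS vs. DS figures from this article).
FIELD_ELEMENTS_PER_BLOB = 4096
BYTES_PER_FIELD_ELEMENT = 32
BLOB_SIZE = FIELD_ELEMENTS_PER_BLOB * BYTES_PER_FIELD_ELEMENT  # 131072 bytes = 128 KiB

MIB = 1024 * 1024


def da_bandwidth_mib(max_blobs_per_block: int) -> float:
    """Maximum blob data per block, in MiB."""
    return max_blobs_per_block * BLOB_SIZE / MIB


pds_max = da_bandwidth_mib(16)    # 2.0 MiB
ds_max = da_bandwidth_mib(256)    # 32.0 MiB
# Targets are half of the maximum, as with EIP-1559-style elastic limits.
pds_target, ds_target = pds_max / 2, ds_max / 2

print(f"blob size: {BLOB_SIZE} bytes")
print(f"PDS: max {pds_max} MiB, target {pds_target} MiB")
print(f"DS:  max {ds_max} MiB, target {ds_target} MiB")
```

The 16x jump in maximum blobs per block is exactly what turns 2 MiB into 32 MiB.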

Here are some of the goodies that EIP-4844 introduces on the way to DS:

A blob-carrying transaction format

KZG commitments to blobs

All of the execution-layer logic required for DS

All of the execution/consensus cross-verification logic required for DS

Layer separation between beacon block verification and blob DAS

Most of the beacon block logic required for DS

A self-adjusting, independent gas price for blobs (multidimensional EIP-1559 with an exponential pricing rule)

Still to be added for DS:

PBS

DAS

2D KZG scheme

Proof of custody, or a similar in-protocol requirement for each validator to verify the availability of a particular part of the sharded data in each block (for about a month)

Note that these data blobs are introduced as a new transaction type on the execution chain, but they don't impose any additional burden on the execution side. The EVM only looks at the commitments attached to the blobs. The execution-layer changes made in EIP-4844 are also forward-compatible with DS, so no further changes are needed on that side. Upgrading from PDS to DS only requires changes to the consensus layer.

In PDS, data blobs are downloaded in full by consensus clients. The blobs are referenced in the beacon block body, but not fully encoded there. Instead of embedding the full contents in the body, the contents of the blobs are propagated separately as a "sidecar." There is one blob sidecar per block; in PDS it is downloaded in full, and in DS validators will sample it via DAS (data availability sampling).

We discussed earlier how blobs are committed to using KZG polynomial commitments. However, instead of using the KZG commitment directly, EIP-4844 uses what we'll actually work with: its versioned hash. This is a single 0x01 byte (representing the version) followed by the last 31 bytes of the SHA256 hash of the KZG commitment.
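The versioned hash is simple enough to sketch directly. The function below is modeled on the `kzg_to_versioned_hash` helper in the EIP-4844 specification; the 48-byte input is an all-zero placeholder, since producing a real KZG commitment requires a KZG library.

```python
import hashlib

VERSIONED_HASH_VERSION_KZG = b"\x01"  # version byte described above


def kzg_to_versioned_hash(kzg_commitment: bytes) -> bytes:
    """0x01 version byte followed by the last 31 bytes of SHA256(commitment)."""
    if len(kzg_commitment) != 48:
        raise ValueError("expected a 48-byte KZG commitment")
    digest = hashlib.sha256(kzg_commitment).digest()
    return VERSIONED_HASH_VERSION_KZG + digest[1:]


# Placeholder 48-byte value; a real commitment would come from a KZG library.
dummy_commitment = bytes(48)
vh = kzg_to_versioned_hash(dummy_commitment)
print(vh.hex())
```

Because the output is always 32 bytes and starts with a version byte, a future commitment scheme could reuse the same slot with a different leading byte.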

We do this for EVM compatibility and forward compatibility:

EVM compatibility - KZG commitments are 48 bytes, while the EVM works more naturally with 32-byte values

Forward compatibility - If we ever switch from KZG to something else (such as STARKs for quantum resistance), commitments can stay 32 bytes

Multidimensional EIP-1559

PDS finally creates a purpose-built data layer: data blobs get their own fee market, with a separate floating gas price and limit. So even if some NFT project sells a bunch of monkey land on L1, your rollup's data costs won't go up (though proof settlement costs will). This reflects the fact that the dominant cost for any rollup project today is publishing its data to L1, not its proofs.

The existing gas fee market stays as it is, and data blobs are added as a new market:

The blob fee is charged in gas, but it's a variable amount that adjusts according to its own EIP-1559 mechanism. The long-run average number of blobs per block should equal the target.
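A minimal sketch of a separate blob fee market follows. `fake_exponential` and the running "excess blob gas" counter mirror the approach in the EIP-4844 specification, but the constants here are illustrative assumptions that use this article's PDS figures (8-blob target, 16-blob max). They are not the values in the final spec, and the update fraction was chosen only so that a full block moves the fee by roughly 12.5%.

```python
BLOB_GAS_PER_BLOB = 2**17              # one unit per byte of a 4096 x 32-byte blob
TARGET_BLOBS_PER_BLOCK = 8             # ~1 MiB target (this article's PDS figure)
MAX_BLOBS_PER_BLOCK = 16               # ~2 MiB max
TARGET_BLOB_GAS = TARGET_BLOBS_PER_BLOCK * BLOB_GAS_PER_BLOB
MIN_BLOB_BASE_FEE = 1                  # wei; assumption for illustration
UPDATE_FRACTION = 8 * TARGET_BLOB_GAS  # assumption: ~12.5% fee change per full block


def fake_exponential(factor: int, numerator: int, denominator: int) -> int:
    """Integer approximation of factor * e**(numerator / denominator) via its Taylor series."""
    i = 1
    output = 0
    numerator_accum = factor * denominator
    while numerator_accum > 0:
        output += numerator_accum
        numerator_accum = (numerator_accum * numerator) // (denominator * i)
        i += 1
    return output // denominator


def next_excess_blob_gas(parent_excess: int, parent_blob_count: int) -> int:
    """Running total of blob gas used above the target (never below zero)."""
    if parent_blob_count > MAX_BLOBS_PER_BLOCK:
        raise ValueError("too many blobs in block")
    used = parent_blob_count * BLOB_GAS_PER_BLOB
    return max(0, parent_excess + used - TARGET_BLOB_GAS)


def blob_base_fee(excess_blob_gas: int) -> int:
    """The blob base fee grows exponentially with accumulated excess blob gas."""
    return fake_exponential(MIN_BLOB_BASE_FEE, excess_blob_gas, UPDATE_FRACTION)


# Sustained demand above target pushes the blob fee up, independently of execution gas.
excess = 0
for _ in range(40):  # 40 consecutive full (16-blob) blocks
    excess = next_excess_blob_gas(excess, MAX_BLOBS_PER_BLOCK)
print(excess // TARGET_BLOB_GAS, blob_base_fee(excess))
```

Blocks exactly at the target leave the excess unchanged, so the fee only moves when demand deviates from the target. That is what lets the long-run average settle at the target.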

There are effectively two parallel auctions: one for computation and one for DA. This is a huge step forward for efficient resource pricing.

This opens up some interesting design choices. For example, it might make sense to move both gas and blob pricing from the current linear EIP-1559 rule to the new exponential EIP-1559 rule. In practice, the current implementation doesn't average out to our target block size: the base fee stabilizes imperfectly, and observed gas usage per block exceeds the target by about 3% on average.
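One way to see why the linear rule doesn't pin average usage to the target is to feed it alternating full and empty blocks, whose average is exactly the target. This is a toy simulation, not a model of the observed ~3% overshoot. It uses EIP-1559's 1/8 maximum-change denominator, and the exponential variant is written directly with `math.exp` for clarity.

```python
import math

MAX_CHANGE_DENOMINATOR = 8  # EIP-1559's base fee moves by at most 1/8 (12.5%) per block


def linear_update(base_fee: float, gas_used: int, target: int) -> float:
    """Today's EIP-1559 rule: multiply by 1 + (used - target) / target / 8."""
    return base_fee * (1 + (gas_used - target) / target / MAX_CHANGE_DENOMINATOR)


def exponential_update(base_fee: float, gas_used: int, target: int) -> float:
    """Exponential variant: multiply by exp((used - target) / target / 8)."""
    return base_fee * math.exp((gas_used - target) / target / MAX_CHANGE_DENOMINATOR)


def simulate(update, usage_pattern, target, start_fee=1.0):
    fee = start_fee
    for used in usage_pattern:
        fee = update(fee, used, target)
    return fee


TARGET = 15_000_000
# Alternate full (2x target) and empty blocks: average usage is exactly the target.
pattern = [2 * TARGET, 0] * 100

print(simulate(linear_update, pattern, TARGET))       # drifts down to about 0.21
print(simulate(exponential_update, pattern, TARGET))  # stays at about 1.0
```

Under the linear rule, each full/empty pair multiplies the fee by 1.125 × 0.875 < 1, so the fee depends on the path rather than on total usage. The exponential rule's adjustments cancel out exactly.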

Part 2 History and State Management

A quick review of the basic concepts:

History - everything that has ever happened on chain. You can just keep it on a hard drive, because it doesn't need fast access. In the long run, this is a 1-of-N honesty assumption.

State - a snapshot of all current account balances, smart contracts, and so on. Full nodes (currently) need this data to validate transactions. It's too big for RAM, and a hard drive is too slow, so it lives on an SSD. High-throughput blockchains balloon their state, growing it far faster than regular people can keep up with on their laptops. If everyday users can't hold the state, they can't fully verify, and decentralization is out of the question.

In short, these things get really big, so it's hard to run a node if you have to hold all of this data. If running a node is too hard, regular people won't do it. That's bad, so we need to make sure it doesn't happen.

Calldata Gas Cost Reduction with a Total Calldata Limit (EIP-4488)

PDS is a good stepping stone to DS, and it meets many of the final requirements. By implementing PDS within a reasonable time frame, the DS schedule can be brought forward.

An easier fix to implement is EIP-4488. It's not as elegant, but it addresses the immediate fee emergency. Unfortunately, it doesn't take any steps toward DS, so it will inevitably need to be superseded later. If PDS starts to look slower than we'd like, it might make sense to pass EIP-4488 quickly (it's only a few lines of code) and then move to PDS six months later. The timing is flexible.

EIP-4488 has two main components:

Reduce the calldata cost from 16 gas per byte to 3 gas per byte

Add a calldata limit of 1 MB per block, plus an extra 300 bytes per transaction (a theoretical maximum of around 1.4 MB in total)

The limit is needed to prevent the worst case: a block full of calldata would reach 18 MB, far beyond what Ethereum can handle. EIP-4488 increases Ethereum's average data capacity, but because of this calldata limit its burst data capacity actually decreases slightly (today's maximum is 30 million gas / 16 gas per calldata byte = 1.875 MB).
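The block-level rule and the capacity figures above can be sketched as follows. The constants (1,048,576-byte base limit and 300-byte per-transaction stipend) are taken from the EIP-4488 draft as I understand it and match the figures in the text; the tx-count bound is a rough estimate that ignores the gas spent on calldata itself.

```python
BLOCK_GAS_LIMIT = 30_000_000
OLD_CALLDATA_GAS_PER_BYTE = 16
BASE_MAX_CALLDATA_PER_BLOCK = 1_048_576  # 1 MiB
CALLDATA_PER_TX_STIPEND = 300            # extra bytes allowed per transaction
TX_BASE_GAS = 21_000                     # minimum gas for any transaction


def block_calldata_ok(calldata_sizes: list) -> bool:
    """Block-level check: total calldata must fit the base limit plus per-tx stipends."""
    limit = BASE_MAX_CALLDATA_PER_BLOCK + CALLDATA_PER_TX_STIPEND * len(calldata_sizes)
    return sum(calldata_sizes) <= limit


# Today's burst capacity: a block stuffed with calldata at 16 gas per byte.
burst_today = BLOCK_GAS_LIMIT // OLD_CALLDATA_GAS_PER_BYTE  # 1,875,000 bytes

# Rough ceiling under EIP-4488: the tx count is bounded by the 21,000 gas base cost
# (this ignores the gas the calldata itself costs, so it slightly overestimates).
max_txs = BLOCK_GAS_LIMIT // TX_BASE_GAS  # 1,428
ceiling_4488 = BASE_MAX_CALLDATA_PER_BLOCK + CALLDATA_PER_TX_STIPEND * max_txs

print(burst_today, ceiling_4488)
```

The ceiling comes out to about 1.48 MB, consistent with the "around 1.4 MB" figure above and below today's 1.875 MB burst.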

EIP-4488 has a much higher sustained load than PDS, because it still uses calldata, whereas PDS uses data blobs that can be pruned after a month. With EIP-4488, history would grow meaningfully faster, which becomes a bottleneck for running a node. Even if EIP-4444 is implemented alongside EIP-4488, it only prunes execution payload history after one year. The lower sustained load of PDS is clearly preferable.

Bound Historical Data in Execution Clients (EIP-4444)

EIP-4444 gives clients the option to locally prune historical data (headers, bodies, and receipts) older than one year. It also requires clients to stop serving this pruned history on the P2P layer. Pruning history lets clients reduce users' disk storage requirements (currently hundreds of GB and growing).
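The one-year window is expressed in beacon chain epochs. This sketch assumes the `HISTORY_PRUNE_EPOCHS = 82125` constant from the EIP-4444 draft, and `may_prune` is a hypothetical helper showing the age check.

```python
SECONDS_PER_SLOT = 12
SLOTS_PER_EPOCH = 32
HISTORY_PRUNE_EPOCHS = 82_125  # ~one year of beacon chain epochs, per the EIP-4444 draft


def retention_days() -> float:
    """Length of the retention window in days."""
    return HISTORY_PRUNE_EPOCHS * SLOTS_PER_EPOCH * SECONDS_PER_SLOT / 86_400


def may_prune(block_epoch: int, current_epoch: int) -> bool:
    """Hypothetical helper: history older than the window may be dropped locally
    and is no longer served over P2P."""
    return current_epoch - block_epoch > HISTORY_PRUNE_EPOCHS


print(retention_days())  # 365.0
```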

This is already important, but if EIP-4488 is implemented it becomes essentially mandatory, since EIP-4488 significantly increases the amount of history. Hopefully this gets done in the relatively near term. Some form of history expiry is eventually needed anyway, so now is a good time to deal with it.

A full sync of the chain requires history, but validating new blocks does not (only state is required). So once a client has synced to the tip of the chain, historical data is only retrieved when explicitly requested via JSON-RPC or when a peer tries to sync the chain. With EIP-4444, we need to find alternative solutions for these cases.

Clients won't be able to "full sync" over devp2p as they do today. Instead, they will "checkpoint sync" from a weak subjectivity checkpoint, which they treat as the genesis block.

Note that weak subjectivity is not an extra assumption; it comes with the move to PoS. Because of possible long-range attacks, syncing already requires valid weak subjectivity checkpoints. The assumption here is that clients won't sync from an invalid or stale weak subjectivity checkpoint. The checkpoint must fall within the window before we start pruning history (here, within one year); otherwise, the P2P layer won't be able to serve the required data.

This will also reduce network bandwidth usage as more clients adopt lightweight sync strategies.

Retrieving Historical Data

Pruning history after a year with EIP-4444 sounds good, and PDS prunes blobs even faster (after about a month). These are necessary moves, because we can't require nodes to store everything and still stay decentralized.

EIP-4488 -- likely about 1 MB per slot in the long run, adding about 2.5 TB of storage per year

PDS -- targets about 1 MB per slot, adding about 2.5 TB of storage per year

DS -- targets about 16 MB per slot, adding about 40 TB of storage per year

But where does the data go? Do we still need it? Yes, but note that losing historical data is not a risk to the protocol, only to individual applications. So the job of Ethereum's core protocol should not include permanently maintaining all of this consensus data.

So who will store the data? Here are some potential contributors:

Individual and institutional volunteers

Block explorers (such as etherscan.io), API providers, and other data services

Third-party indexing protocols such as The Graph, which can create incentivized marketplaces where clients pay servers for historical data along with Merkle proofs

Clients in the Portal Network (currently under development), which can store random portions of chain history; the Portal Network automatically routes data requests to the nodes that hold the data

BitTorrent, for example by automatically generating and distributing a 7 GB file containing each day's blob data

Application-specific protocols such as rollups, which can require their nodes to store the portion of history relevant to their application

Long-term data storage is a relatively easy problem because it is a 1-of-N trust assumption, as we discussed earlier. It is years away from becoming the ultimate limit on blockchain scalability.

Weak Statelessness

OK, so we've got a pretty good handle on managing history, but what about state? State is actually the main bottleneck to improving Ethereum's TPS right now.

Full nodes take the pre-state root, execute all of the transactions in a block, and check that the resulting post-state root matches the one provided in the block. To know whether those transactions are valid, they currently need the state on hand to check against.

Going stateless means a node doesn't need the state on hand to do its job. Ethereum is working toward "weak statelessness": state is not required to validate blocks, but it is required to build them. Validation becomes a pure function: give me a block in complete isolation and I can tell you whether it's valid. It basically looks like this:

Because of PBS, builders still need the state, which is acceptable: they'll be more centralized, high-spec entities anyway. Our focus is on decentralizing validators. Weak statelessness gives builders a bit more work and validators a lot less. That's a great trade.

We use witnesses to achieve this magic stateless execution. Witnesses are proofs of correct state access, and builders will start including them in every block. Validating a block doesn't actually require the entire state; you only need the state read or modified by the transactions in that block. Builders will include the pieces of state touched by the transactions in a given block, and they'll use witnesses to prove that they accessed that state correctly.

Let's take an example. Alice wants to send Bob 1 ETH. To verify a block containing this transaction, I need to know:

Before the transaction - Alice has 1 ETH

Alice's public key - so I can tell the signature is correct

Alice's nonce - so I can tell her transactions were sent in the correct order

After executing the transaction - Bob has gained 1 ETH and Alice has lost 1 ETH

In a weakly stateless world, the builder adds the witness data above to the block and proves that it is accurate. The validator receives the block, executes it, and decides whether it's valid. That's it.
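The "pure function" idea can be illustrated with a toy transfer check that touches only the state fragments shipped with the block. All names here are hypothetical. The sketch deliberately leaves out signature checking (the public-key part above), gas, and verification of the witness against the pre-state root, so it only shows the shape of stateless validation.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Account:
    balance: int  # in wei
    nonce: int


@dataclass(frozen=True)
class Transfer:
    sender: str
    recipient: str
    value: int
    nonce: int


def validate_transfer(witness: dict, tx: Transfer) -> dict:
    """Pure function: uses only the state fragments included with the block.

    `witness` maps address -> Account for exactly the accounts the tx touches.
    Signature checks and proofs against the pre-state root are omitted here.
    """
    sender = witness[tx.sender]
    recipient = witness[tx.recipient]
    if tx.nonce != sender.nonce:
        raise ValueError("bad nonce")
    if sender.balance < tx.value:
        raise ValueError("insufficient balance")
    # Return the post-state fragment; nothing outside the witness is read or written.
    return {
        tx.sender: Account(sender.balance - tx.value, sender.nonce + 1),
        tx.recipient: Account(recipient.balance + tx.value, recipient.nonce),
    }


ETH = 10**18
witness = {"alice": Account(1 * ETH, 0), "bob": Account(0, 0)}
post = validate_transfer(witness, Transfer("alice", "bob", 1 * ETH, 0))
print(post)
```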

From the validator's point of view, there are some implications:

The huge SSD requirement for holding state goes away, removing a key bottleneck to scaling today.

Bandwidth requirements go up a bit, since you now also download witness data and proofs. This is a bottleneck with Merkle-Patricia trees, though a mild one, and it is not a bottleneck with Verkle tries.

You still execute the transactions to fully validate. Statelessness acknowledges that execution is not currently the bottleneck for scaling Ethereum.

Weak statelessness also allows Ethereum to relax its self-imposed limits on execution throughput, since state bloat is no longer a pressing concern. Raising the gas limit by around 3x might make sense.

By then, most user execution will happen on L2, but higher L1 throughput benefits even L2 users. Rollups rely on Ethereum for DA (publishing to shards) and settlement (which requires L1 execution). As Ethereum scales its DA layer, the amortized cost of publishing proofs may make up a larger share of rollup costs (especially for ZK-rollups).

Verkle Tries

We deliberately skipped over how these witnesses work. Ethereum currently stores state in a Merkle-Patricia tree, but the required Merkle proofs are far too large for these witnesses to be feasible.

Ethereum will switch to Verkle tries for storing state. Verkle proofs are much more efficient, so they can serve as viable witnesses for weak statelessness.

First, let's review what a Merkle tree looks like. Each transaction is hashed first; these hashes at the bottom are called "leaves." All of the hashes are called "nodes," and each node is the hash of the two child nodes below it. The final hash at the top is the "Merkle root."

This data structure is very helpful for proving that a transaction is included without downloading the entire tree. For example, to verify that transaction H4 is included, you only need H12, H3, and H5678 in the Merkle proof. We already have H12345678 from the block header. So a light client can request these hashes from a full node and hash them together along the path up the tree. If the result is H12345678, we have successfully proven that H4 is in the tree.
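The H4 example can be reproduced with a small binary Merkle tree. This is a sketch, assuming SHA256 as the hash and a power-of-two number of leaves. It is a plain Merkle tree, not Ethereum's Merkle-Patricia trie.

```python
import hashlib


def h(data: bytes) -> bytes:
    return hashlib.sha256(data).digest()


def next_level(level: list) -> list:
    """Hash adjacent pairs: each parent is H(left || right)."""
    return [h(level[i] + level[i + 1]) for i in range(0, len(level), 2)]


def merkle_root(leaves: list) -> bytes:
    level = leaves
    while len(level) > 1:
        level = next_level(level)
    return level[0]


def merkle_proof(leaves: list, index: int) -> list:
    """Collect the sibling hash at each level on the path from leaf to root."""
    proof, level = [], leaves
    while len(level) > 1:
        proof.append(level[index ^ 1])  # sibling is the other member of the pair
        level = next_level(level)
        index //= 2
    return proof


def verify(leaf: bytes, index: int, proof: list, root: bytes) -> bool:
    node = leaf
    for sibling in proof:
        # An odd index means the current node is the right child.
        node = h(sibling + node) if index % 2 else h(node + sibling)
        index //= 2
    return node == root


# Eight transactions H1..H8; prove inclusion of H4 (index 3).
leaves = [h(f"tx{i}".encode()) for i in range(1, 9)]
root = merkle_root(leaves)       # plays the role of H12345678
proof = merkle_proof(leaves, 3)  # [H3, H12, H5678]
print(verify(leaves[3], 3, proof, root))
```

The proof has one entry per level, which is why proof size grows with tree depth.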

But the deeper the tree, the longer the path to the bottom, so you need more items to prove it. Therefore, shallow and wide trees are better suited for efficient proofs.

The problem is that making a Merkle tree wider by adding more children under each node is very inefficient. To move up the tree, you have to hash all of the sibling hashes together, so a Merkle proof has to include all of those sibling hashes. That makes proofs huge.

That's where efficient vector commitments come in. Note that the hashes used in Merkle trees are actually vector commitments; they're just poor ones that can only efficiently commit to two elements. What we want is a vector commitment where you don't need to receive all of the siblings to verify. Once we have that, we can make the tree wider and reduce its depth. This is how we get efficient proof sizes: by reducing the amount of information that needs to be provided.

A Verkle trie is similar to a Merkle tree, but it commits to its children with efficient vector commitments (hence the name "Verkle") instead of simple hashes. The basic idea is that each node can have many children, but I don't need all of them to verify a proof. The proof size is constant regardless of the width.
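A back-of-the-envelope comparison shows why width hurts Merkle proofs but not vector-commitment proofs. The vector-commitment model is idealized and is my own assumption: one constant-size, 32-byte opening per level, regardless of width. Real Verkle proofs carry path commitments plus an aggregated multiproof, so the absolute numbers are not Ethereum's. Only the trend matters.

```python
def tree_depth(num_leaves: int, width: int) -> int:
    """Smallest depth d with width**d >= num_leaves (integer math, no floats)."""
    depth, capacity = 0, 1
    while capacity < num_leaves:
        capacity *= width
        depth += 1
    return depth


def merkle_proof_bytes(num_leaves: int, width: int, hash_size: int = 32) -> int:
    # A Merkle proof must supply all (width - 1) sibling hashes at every level.
    return tree_depth(num_leaves, width) * (width - 1) * hash_size


def idealized_vc_proof_bytes(num_leaves: int, width: int, opening_size: int = 32) -> int:
    # Assumption: one constant-size opening per level, independent of width.
    return tree_depth(num_leaves, width) * opening_size


N = 2**24  # ~16.7 million leaves, an arbitrary example size
for width in (2, 16, 256):
    print(width, merkle_proof_bytes(N, width), idealized_vc_proof_bytes(N, width))
```

Widening a Merkle tree from 2 to 256 children makes its proofs about 30x larger, while the idealized vector-commitment proof shrinks as the tree gets shallower.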

We've actually already seen a good example of this: KZG commitments can also be used as vector commitments. In fact, that's what Ethereum developers originally planned to use here. They later switched to Pedersen commitments to fill a similar role. These will be based on an elliptic curve (in this case, Bandersnatch) and will commit to 256 values (much better than two!).

So why not build a tree of depth one, as wide as possible? That would be great for the verifier, who would get a super compact proof. But there is a practical tradeoff: the prover has to be able to compute the proof, and the wider the tree, the harder that gets. So Verkle tries will sit between the two extremes, with a width of 256 values.

State Expiry

Weak statelessness removes the state-bloat constraint from validators, but the state doesn't magically disappear. Transaction costs are capped, but transactions impose a permanent tax on the network by growing the state. State growth remains a permanent drag on the network, and we need to do something about this fundamental problem.

This is why we need state expiry. State that has been inactive for a long period (say, a year or two) gets cut off, even from what block builders have to carry. Active users won't notice any change, and we can discard heavy state that is no longer needed.

If you ever need to restore expired state, you just present a proof and reactivate it. This falls back on a 1-of-N storage assumption: as long as someone still has the full history (a block explorer, etc.), you can get what you need from them.
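The expire-then-resurrect flow can be modeled with a toy. Everything here is hypothetical and heavily simplified. State is bucketed into abstract "periods." Expired entries leave behind only a hash, which stands in for the Merkle/Verkle proof against a historical root that a real design would use, and anyone holding the old value (for example, from an archive) can bring it back.

```python
import hashlib


def commit(key: str, value: int) -> bytes:
    """Toy commitment to an expired entry (stand-in for a real state proof)."""
    return hashlib.sha256(f"{key}:{value}".encode()).digest()


class ExpiringState:
    """Hypothetical model: entries untouched for `expiry_periods` are dropped."""

    def __init__(self, expiry_periods: int):
        self.expiry_periods = expiry_periods
        self.active = {}      # key -> (value, last_touched_period)
        self.expired = set()  # commitments to entries that were cut off

    def touch(self, key: str, value: int, period: int) -> None:
        self.active[key] = (value, period)

    def expire(self, current_period: int) -> None:
        for key, (value, last) in list(self.active.items()):
            if current_period - last >= self.expiry_periods:
                del self.active[key]
                self.expired.add(commit(key, value))

    def resurrect(self, key: str, value: int, period: int) -> None:
        """Reactivate an expired entry given its old value (e.g. from an archive)."""
        c = commit(key, value)
        if c not in self.expired:
            raise ValueError("no matching expired entry")
        self.expired.remove(c)
        self.active[key] = (value, period)


state = ExpiringState(expiry_periods=2)
state.touch("alice", 5, period=0)
state.touch("bob", 7, period=2)
state.expire(current_period=2)  # alice untouched for 2 periods -> expired
print(sorted(state.active))     # ['bob']
state.resurrect("alice", 5, period=3)
```

Active users (bob here) never notice expiry; only long-idle state has to be brought back with a proof.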

Weak statelessness relieves the base layer's immediate need for state expiry, but expiry is still good to have in the long run, especially as L1 throughput increases. It will be an even more useful tool for high-throughput rollups, whose L2 state will grow so much faster that it could drag down even high-spec builders.

Part 3 It's All About MEV

PBS is necessary to implement DS (Danksharding) safely, but remember that it was originally designed to counter the centralizing power of MEV. You'll notice a recurring trend in Ethereum research today: MEV is now front and center in cryptoeconomics.

When designing blockchains, keeping MEV in mind is key to maintaining security and decentralization. The basic protocol-level approaches are:

Minimize harmful MEV as much as possible (e.g., single-slot finality, single secret leader election)

Democratize the rest (for example, MEV-Boost, PBS, MEV smoothing)

The remaining MEV must be easy to capture and spread across validators. Otherwise, it will centralize the validator set, because ordinary validators can't compete with sophisticated searchers. This is made worse by the fact that MEV will account for a much larger share of validator rewards after the merge (staking issuance is much lower than the inflation paid to miners). This can't be ignored.

Today's MEV supply chain

Today's order of events looks like this:

Mining pools play the builder role here. MEV searchers use Flashbots to relay bundles of transactions (along with their respective bids) to mining pools. Pool operators aggregate a full block and pass the block header to individual miners. Miners prove the block with PoW, which gives it weight in the fork-choice rule.

Flashbots was created to prevent vertical integration across the whole stack, which would open the door to censorship and other nasty externalities. By the time Flashbots launched, mining pools had already begun striking exclusive deals with trading firms to extract MEV. Flashbots gave them an easy way to aggregate MEV bids and avoid vertical integration instead (by implementing MEV-Geth).

After the merge, mining pools go away. We want to make it reasonably easy for ordinary at-home validators to participate, which means someone else has to take on the specialized builder role. At-home validators are unlikely to be as good at capturing MEV as hedge funds with big salaries. Left unchecked, ordinary people won't be able to compete, and the validator set will centralize. Structured properly, the protocol can redirect MEV revenue into everyday validators' staking yield.

MEV-Boost

Unfortunately, in-protocol PBS simply wasn't ready in time for the merge. Once again, Flashbots offers a stopgap: MEV-Boost.

By default, post-merge validators receive transactions from the public mempool directly into their execution clients. They can package these transactions, hand them to their consensus client, and broadcast the block to the network. (If you need a refresher on how Ethereum's consensus and execution clients work together, I cover that in Part 4.)

But as we discussed, your mom and the average validator don't know how to extract MEV, so Flashbots offers an alternative. MEV-Boost plugs into your consensus client and lets you outsource specialized block building. Importantly, you still retain the option of using your own execution client.

MEV searchers will keep playing the role they do today. They run specific strategies (statistical arbitrage, atomic arbitrage, sandwiches, etc.) and bid for their bundles to be included. Builders then aggregate all the bundles they see, along with any private order flow (for example, from Flashbots Protect), into the most profitable full block. The builder passes only the block header to the validator, through a relay, via MEV-Boost. Flashbots intends to run the relay and a builder and plans to decentralize over time, but whitelisting additional builders may be slow.

MEV-Boost requires validators to trust the relay: the consensus client receives the header and signs it before the block body is revealed. The relay's purpose is to attest to the proposer that the body is valid and exists, so the validator doesn't have to trust the builder directly.
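The commit-before-reveal flow can be sketched as a toy. `ToyRelay` and its methods are hypothetical; this is not the MEV-Boost API, and real proposers sign a blinded header with BLS rather than simply passing the header along. The point is the ordering: the proposer commits to a header it can't see behind, and it trusts the relay to release a matching body.

```python
import hashlib


def header_for(payload: bytes) -> bytes:
    """Toy 'header': a hash that commits to the full block body."""
    return hashlib.sha256(payload).digest()


class ToyRelay:
    """Hypothetical relay that escrows builder payloads until the proposer commits."""

    def __init__(self):
        self._bids = {}  # header -> (payload, bid)

    def submit(self, payload: bytes, bid: int) -> None:
        # The relay sees the full body, so it can vouch that it exists and matches the header.
        self._bids[header_for(payload)] = (payload, bid)

    def best_header(self):
        """What the proposer sees: only the winning header and its bid, not the body."""
        header = max(self._bids, key=lambda hdr: self._bids[hdr][1])
        return header, self._bids[header][1]

    def reveal(self, committed_header: bytes) -> bytes:
        """Release the body only after the proposer has committed to the header."""
        return self._bids[committed_header][0]


relay = ToyRelay()
relay.submit(b"block body from builder A", bid=3)
relay.submit(b"block body from builder B", bid=5)

header, bid = relay.best_header()
committed = header  # stand-in for the proposer's signature over the blinded header
body = relay.reveal(committed)
print(bid, header_for(body) == committed)
```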

When in-protocol PBS is ready, it will codify what MEV-Boost offers in the meantime. PBS provides the same separation of powers, makes it easier for builders to decentralize, and removes the need for proposers to trust anyone.

Committee-driven MEV Smoothing

PBS has also made possible a cool idea -- committee-driven MEV Smoothing.

We've seen that the ability to extract MEV is a centralizing force on the validator set, but so is the way MEV is distributed. The high variability of MEV rewards from block to block encourages validators to pool together to smooth out returns over time (as we see with mining pools today, albeit to a different degree).

By default, the proposer of a block receives the builder's full payment. MEV smoothing would take a portion of that payment and distribute it across many validators. A committee of validators examines the submitted bids and attests that the chosen block really was the highest bid. If everything checks out, the block proceeds, and the reward is split between the committee and the proposer.
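Here is a toy version of the committee check and reward split. The source doesn't specify a split or an attestation threshold, so the 50% committee share and the 2/3 threshold below are purely hypothetical parameters, and amounts are plain integers (e.g., gwei).

```python
def smoothing_outcome(observed_bids: list, chosen_bid: int, committee_share_pct: int = 50):
    """Return (proposer_payment, per_member_payment), or None if the committee rejects.

    observed_bids[i] is the best builder bid committee member i saw.
    """
    # Each member attests only if the proposer picked a bid at least as high as any it saw.
    votes = sum(1 for best in observed_bids if chosen_bid >= best)
    if 3 * votes < 2 * len(observed_bids):  # hypothetical 2/3 attestation threshold
        return None
    committee_total = chosen_bid * committee_share_pct // 100
    per_member = committee_total // len(observed_bids)  # integer division; dust is ignored
    return chosen_bid - committee_total, per_member


print(smoothing_outcome([100, 100, 100, 90], chosen_bid=100))  # (50, 12)
print(smoothing_outcome([100, 100, 90, 80], chosen_bid=80))    # None
```

A proposer who picks a lower bid (perhaps because of a side payment) fails the committee check, which is the mechanism the next paragraph relies on.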

This also solves another problem: out-of-band bribes. A proposer might be incentivized to submit a suboptimal block and accept an outright out-of-band bribe, hiding the payment from everyone else. The committee's attestation keeps the proposer honest.

In-protocol PBS is a prerequisite for MEV smoothing, because the protocol needs visibility into the builder market and the specific bids being submitted. There are a few open research questions, but this is an exciting proposal and critical for keeping the validator set decentralized.

Single-slot Finality

Fast finality is valuable. Waiting around 15 minutes isn't ideal for user experience or cross-chain communication. More importantly, slow finality creates an MEV reorg problem.

After the merge, Ethereum will already have much stronger confirmations than it does today: thousands of validators attest to each block, instead of miners competing and potentially mining at the same block height without voting. That makes reorgs quite difficult. Still, it isn't true finality. If the latest block contains some tempting MEV, validators may be tempted to try to reorg the chain and take the reward for themselves.

Single-slot finality removes this threat. Reverting a finalized block would require at least one-third of validators, and their stake would be slashed immediately (millions of ETH).
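A rough sense of "millions of ETH" comes from the validator count quoted later in this article. This sketch assumes every validator has exactly 32 ETH at stake and that the full stake of a one-third coalition is lost. Both are simplifications: effective balances and actual slashing penalties differ.

```python
ETH_PER_VALIDATOR = 32
NUM_VALIDATORS = 380_000  # figure quoted in this article

total_staked = ETH_PER_VALIDATOR * NUM_VALIDATORS  # 12,160,000 ETH
min_at_risk = total_staked // 3                    # ~4.05 million ETH

print(f"total staked: {total_staked:,} ETH")
print(f"stake at risk to revert a finalized block: at least ~{min_at_risk:,} ETH")
```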

I won't go into much detail about the underlying mechanics here. Single-slot finality is a very distant part of Ethereum's roadmap, and it's still an open design space.

In today's consensus protocol (without single-slot finality), Ethereum only needs 1/32 of validators to attest to each slot (about 12,000 out of the current 380,000+). Extending this voting to the full validator set within a single slot, using BLS signature aggregation, requires more work: it means compressing hundreds of thousands of votes into a single verification.

Vitalik lists some interesting solutions, check them out here.

Single Secret Leader Election

SSLE aims to patch another MEV attack vector we'll face after the merge.

The list of beacon chain validators and the schedule of upcoming leaders are public, and it's fairly easy to deanonymize validators and map them to their IP addresses. You can probably see the problem.

More sophisticated validators can use tricks to hide themselves better, but ordinary validators will be especially vulnerable to being doxxed and then DDoSed. This is easy to exploit for MEV.

Say you're the proposer of block N and I'm the proposer of block N+1. If I know your IP address, I can cheaply DDoS you so that you time out and fail to produce your block. Now I can capture the MEV of both blocks and double my returns. EIP-1559's elastic block size (maximum gas per block is twice the target) makes this worse, because I can cram what should have been two blocks' worth of transactions into my single block, which can now be twice as large.

In short, at-home validators might simply give up validating because they'd be attacked. SSLE prevents this attack by ensuring that nobody but the proposer knows when their turn is coming. It won't be in place at the merge, but hopefully it arrives sooner rather than later.

Part 4 The Secrets of the Merge

Okay, I was joking earlier. I really do think (hope) the merge will come relatively soon.

It's exciting enough that I feel I have to cover it. With that, your Ethereum crash course is complete.

Merged Clients

Today, you run a single monolithic client (Go Ethereum, Nethermind, etc.) that handles everything. Specifically, a full node does two things:

Execution -- Execute every transaction in the block to ensure validity. Take the pre-state root, apply every operation, and check that the resulting post-state root is correct

Consensus -- Verify that you're on the heaviest chain, the one with the most work done (highest PoW), a.k.a. Nakamoto consensus

The two are inseparable, because a full node follows not just the heaviest chain, but the heaviest valid chain. That's what makes it a full node rather than a light node. Even during a 51% attack, a full node won't accept invalid transactions.

The beacon chain currently runs only consensus, giving PoS a trial run without any execution. Eventually, a terminal total difficulty will be reached, at which point current Ethereum execution blocks will merge into beacon chain blocks, forming a single chain:

Under the hood, however, a full node will run two separate clients that interoperate:

Execution client (f.k.a. "Eth1 client") -- Current Eth1 clients continue to handle execution. They process blocks, maintain mempools, and manage and sync state. The PoW parts are removed.

Consensus client (f.k.a. "Eth2 client") -- Current beacon chain clients continue to handle PoS consensus. They track the chain head, gossip and attest to blocks, and receive validator rewards.

The consensus client receives a beacon chain block, the execution client runs its transactions, and the consensus client then follows that chain if everything checks out. You'll be able to mix and match the execution and consensus clients of your choice, since all of them interoperate. A new Engine API will be introduced for the clients to communicate with each other:


Consensus After the Merge

Today's Nakamoto consensus is simple. Miners create new blocks and add them to the heaviest valid chain they observe.

The merged Ethereum moves to Gasper, a combination of Casper FFG (the finality gadget) and LMD GHOST (the fork-choice rule), to reach consensus. In short, it's a liveness-favoring consensus rather than a safety-favoring one.

The difference is that safety-favoring consensus algorithms (such as Tendermint) halt when they can't get the necessary votes (here, from the validator set). Liveness-favoring chains (such as PoW + Nakamoto consensus) keep building an optimistic ledger no matter what, but without enough votes they can't reach finality. Bitcoin and today's Ethereum never reach finality; you just assume that after enough blocks, a reorg won't happen.

However, Ethereum will also reach finality through periodic checkpoints if enough votes are cast. Each 32 ETH stake is a separate validator, and there are more than 380,000 beacon chain validators. An epoch consists of 32 slots, and the validators are split so that each attests to one slot in a given epoch (about 12,000 attestations per slot). The fork-choice rule LMD GHOST then determines the current head of the chain based on these attestations. A new block is added every slot (12 seconds), so an epoch lasts 6.4 minutes. Finality is typically reached with the necessary votes after two epochs (so 64 slots, though it can take up to 95).
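The timing figures above follow directly from the slot and epoch parameters. This quick check uses only numbers stated in the text (12-second slots, 32-slot epochs, ~380,000 validators, 64 to 95 slots to finality).

```python
SECONDS_PER_SLOT = 12
SLOTS_PER_EPOCH = 32
NUM_VALIDATORS = 380_000

epoch_minutes = SLOTS_PER_EPOCH * SECONDS_PER_SLOT / 60                  # 6.4
attesters_per_slot = NUM_VALIDATORS // SLOTS_PER_EPOCH                   # 11,875 (~12,000)
typical_finality_minutes = 2 * SLOTS_PER_EPOCH * SECONDS_PER_SLOT / 60   # 12.8 (64 slots)
worst_case_finality_minutes = 95 * SECONDS_PER_SLOT / 60                 # 19.0 (95 slots)

print(epoch_minutes, attesters_per_slot, typical_finality_minutes, worst_case_finality_minutes)
```

Those 12.8 to 19 minutes are the "around 15 minutes" wait that single-slot finality aims to eliminate.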

Concluding Thoughts

All roads lead to the same endgame: centralized block production, decentralized trustless block validation, and censorship resistance. Ethereum's roadmap puts this vision front and center.

Ethereum aims to be the ultimate unified DA and settlement layer: massively decentralized and secure, with scalable computation on top. This condenses cryptographic assumptions down to one robust layer: a unified yet modular (or is it disaggregated now?) base layer that also captures the highest value in L1 design, leading to monetary premium and economic security, as I covered recently (now publicly available).

I hope you now have a clearer sense of how intertwined Ethereum research is. It's very cutting-edge, all the pieces are in flux, and it's not easy to grasp the big picture. You have to keep up with it.

In the end, it all comes back to that single vision. Ethereum gives us a compelling path to massive scalability while remaining true to the values that we care so much about in this space.

Special thanks to Dankrad Feist for his review and insights.

The original link

Welcome to join the official BlockBeats community:

Telegram Subscription Group: https://t.me/theblockbeats

Telegram Discussion Group: https://t.me/BlockBeats_App

Official Twitter Account: https://twitter.com/BlockBeatsAsia
